P6-Reporter to Power BI - An Enterprise Architecture Upgrade from Gateway-Only to Fabric-Ready

Who this is for: Teams running Primavera P6 software with project data generated by P6-Reporter and stored in a self-hosted Oracle database, who want to light up Power BI—and now also Microsoft Fabric—without re-platforming their core systems.

Executive Summary

We’ve upgraded our analytics architecture from a single lane (Gateway → Power BI) to a multi-lane, Fabric-ready highway. The result: the same trusted P6-Reporter data, now consumable through Fabric Warehouse in addition to the classic Gateway path. That means more flexibility for your BI teams, better governance options, and a clearer path to scale—without changing how P6-Reporter creates data.

Before → After (at a Glance)

Before: Oracle (on‑prem) → On‑premises Data Gateway → Power BI Service

After: Oracle (on‑prem) → Gateway + Data Pipelines → Fabric Warehouse → Power BI Service

Why this matters: You can keep your P6-Reporter-powered database as is, and choose the most suitable consumption pattern—classic Gateway refresh or Fabric’s cloud data layer—project by project.

Why Call it an Architecture Upgrade?

- Choice, not churn. We add a Fabric lane alongside your existing Gateway lane. No forced migration; pick what’s right for each report.

- A governed cloud data layer. Fabric Warehouse offers a central SQL endpoint for modeling and reuse across many Power BI datasets.

- Operational resilience. Pipelines orchestrate loads (full + incremental), isolate failures, and align refresh with business cadence.

- Future-proofing. A stepping-stone to Lakehouse, notebooks/ML, and enterprise-grade data engineering—whenever you’re ready.

The P6-Reporter Context

- What P6-Reporter does: Automates snapshots and curated structures from Primavera P6 so that project teams can track progress and changes, gather resource productivity, and see trends without wrestling with raw P6.

- Where the data lives: In your self-hosted Oracle database, close to your operational systems and security controls.

- What’s new today: In addition to the classic connection, you can now sync those same P6-Reporter tables into Fabric Warehouse and point Power BI at a SQL endpoint in the Microsoft Azure cloud.

Target Architecture After the Upgrade

- Database (on-prem): P6-Reporter data lives here.

- On-premises Data Gateway: secure outbound bridge.

- Fabric Data Pipelines: copy/sync (full + incremental).

- Fabric Warehouse: governed SQL endpoint for BI.

- Power BI: reports & semantic models published to the Service.

End-to-End Walkthrough

0) Prerequisites

- Workspace bound to Fabric F-capacity.

- On-premises Data Gateway (standard mode) online with the P6-Reporter data source configured; connection test passing.

- Access rights: Workspace Admin/Member, permission to use the Gateway data source.

- Power BI Desktop that supports Get Data → Microsoft Fabric → Warehouses.

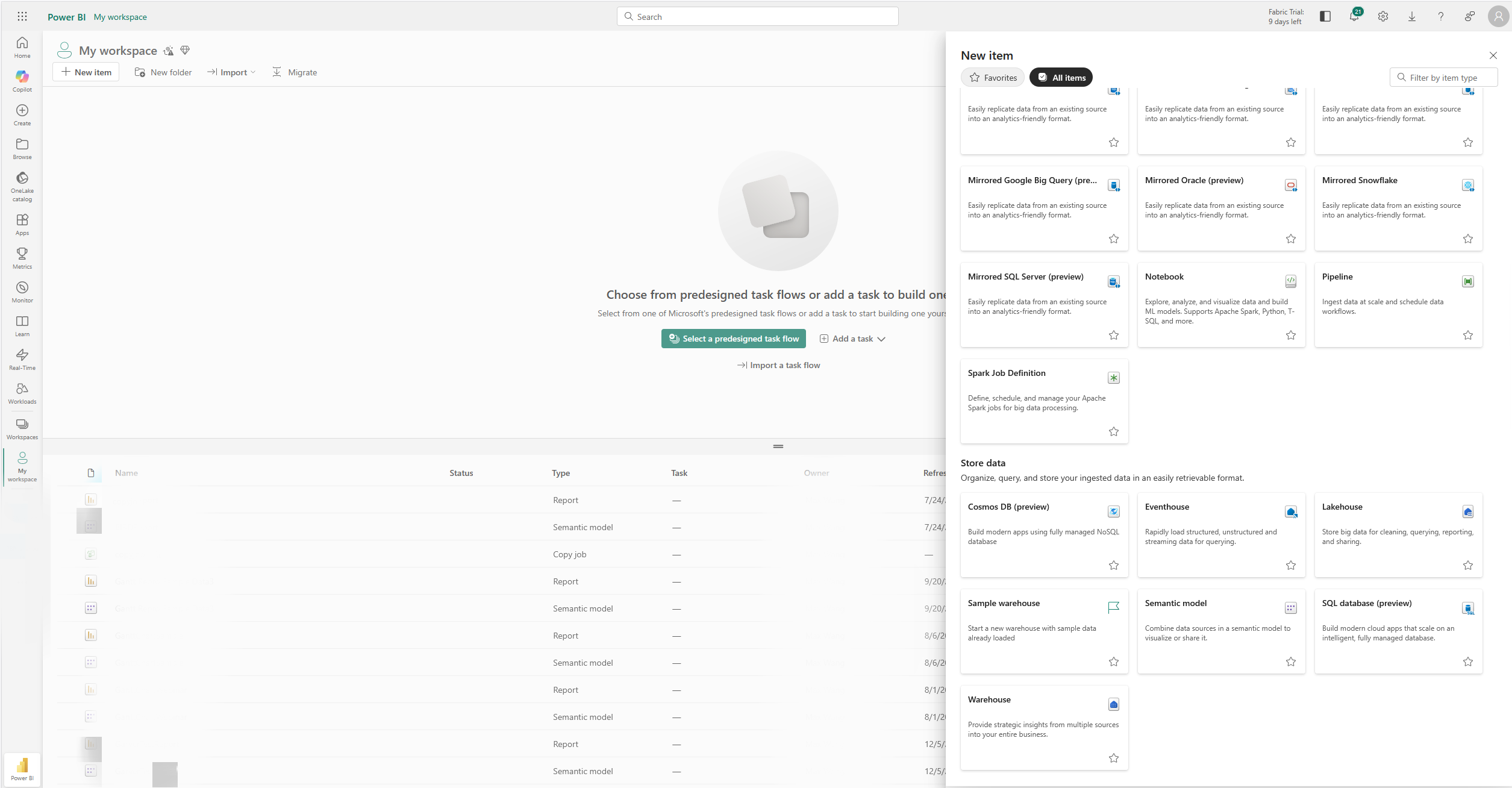

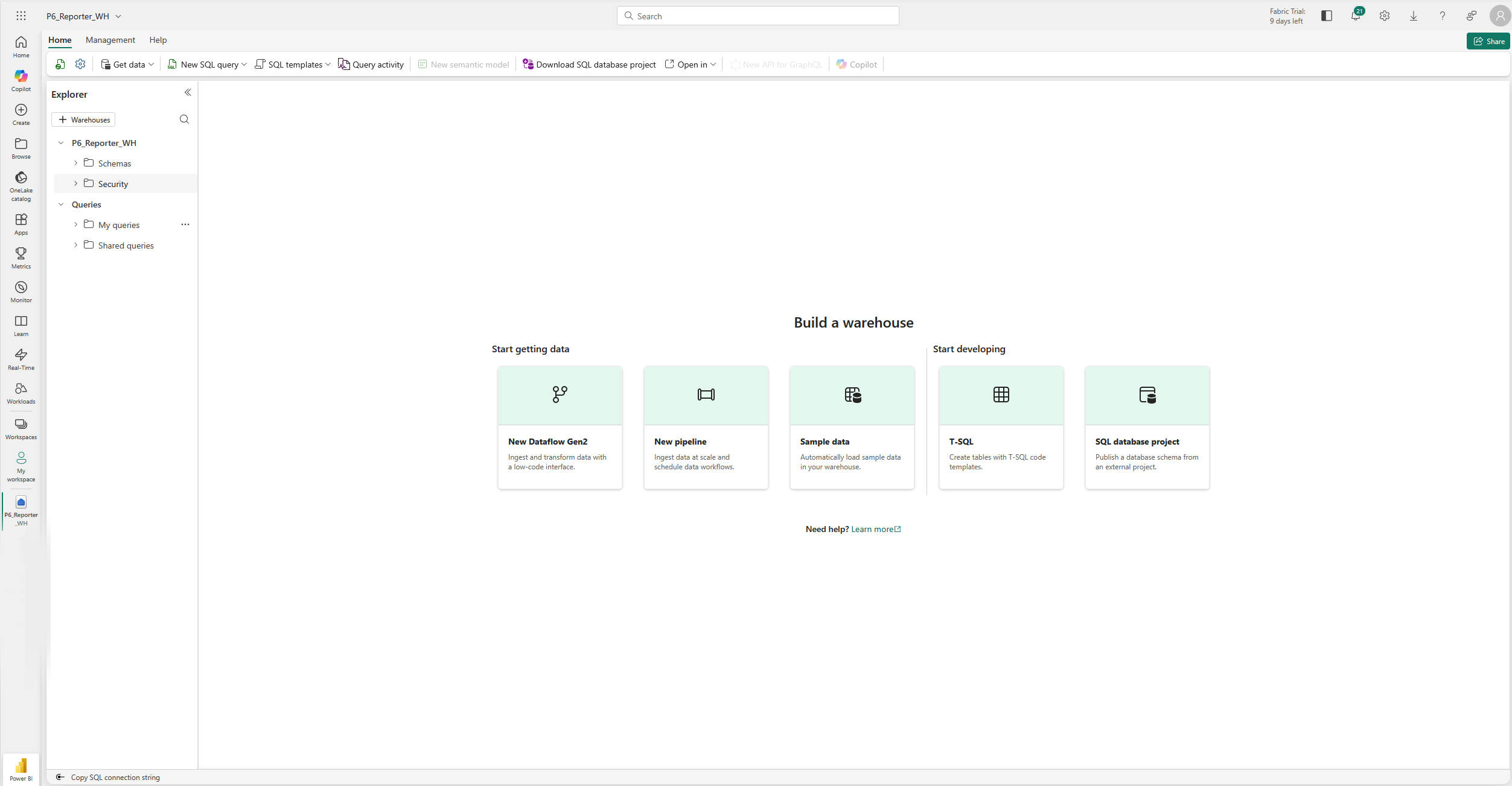

1) Create a Fabric Warehouse

- In your Fabric-backed workspace, go to New → Warehouse (e.g., P6_Reporter_WH).

Name it, and Fabric creates the Warehouse.

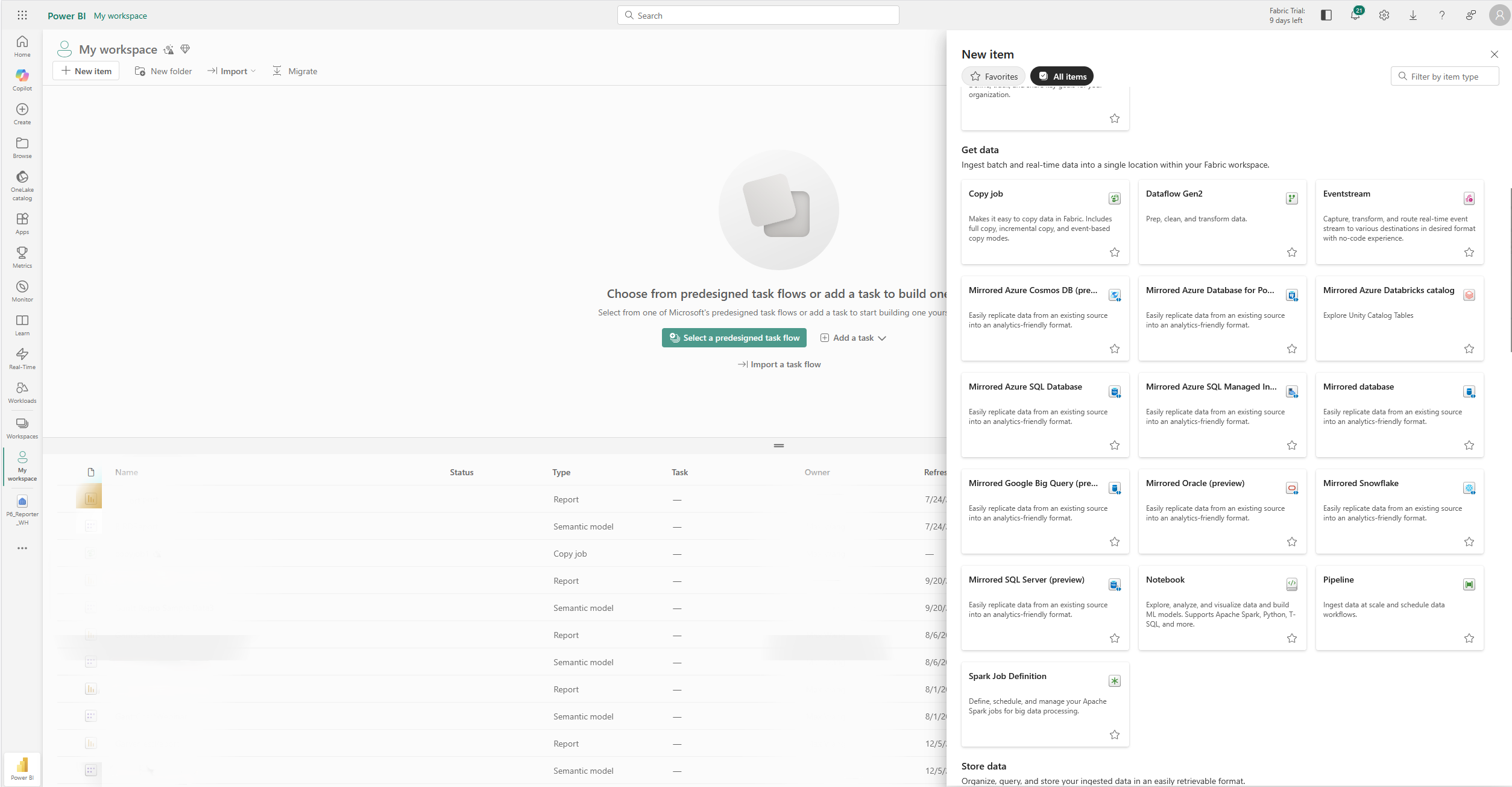

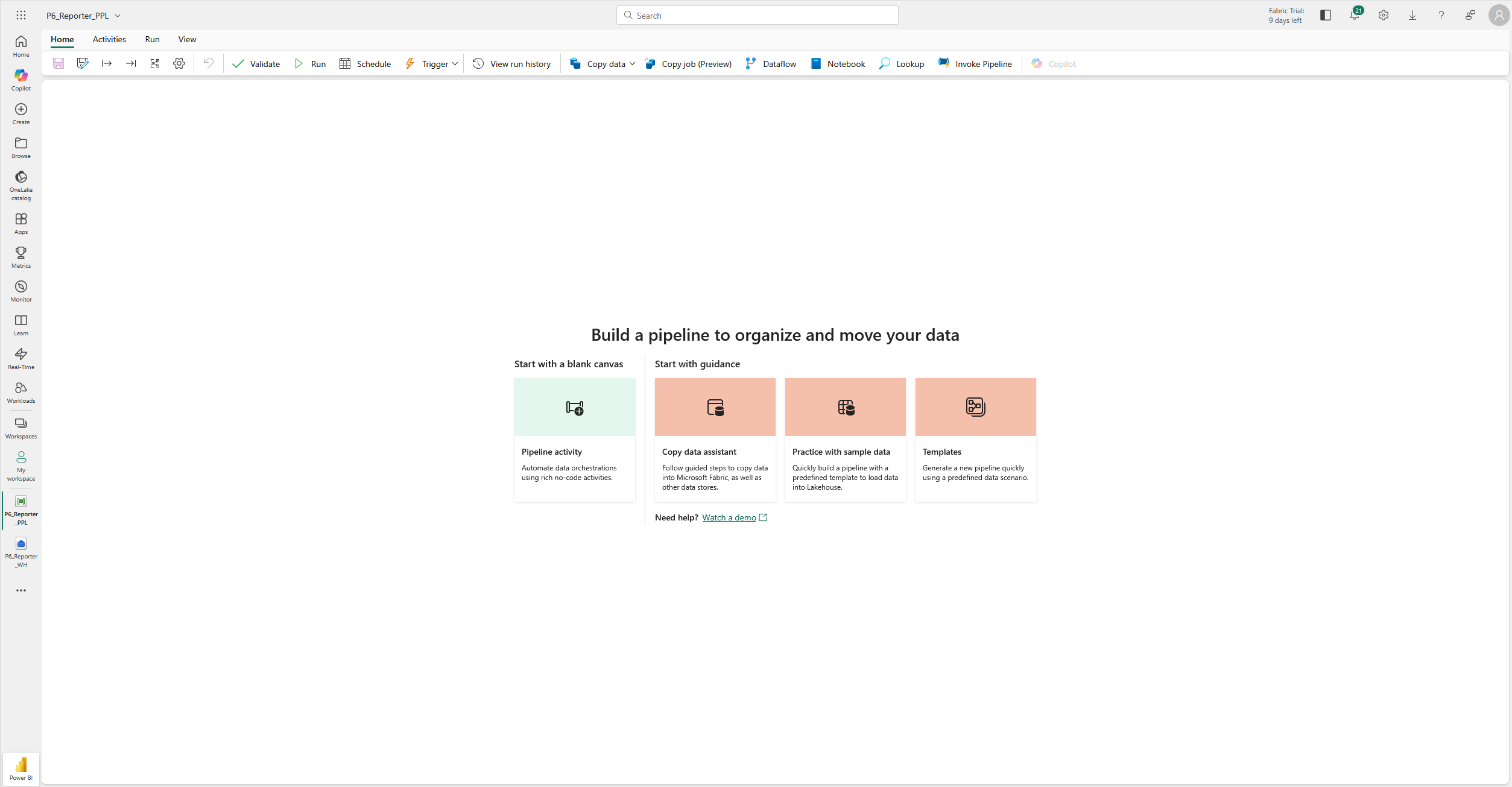

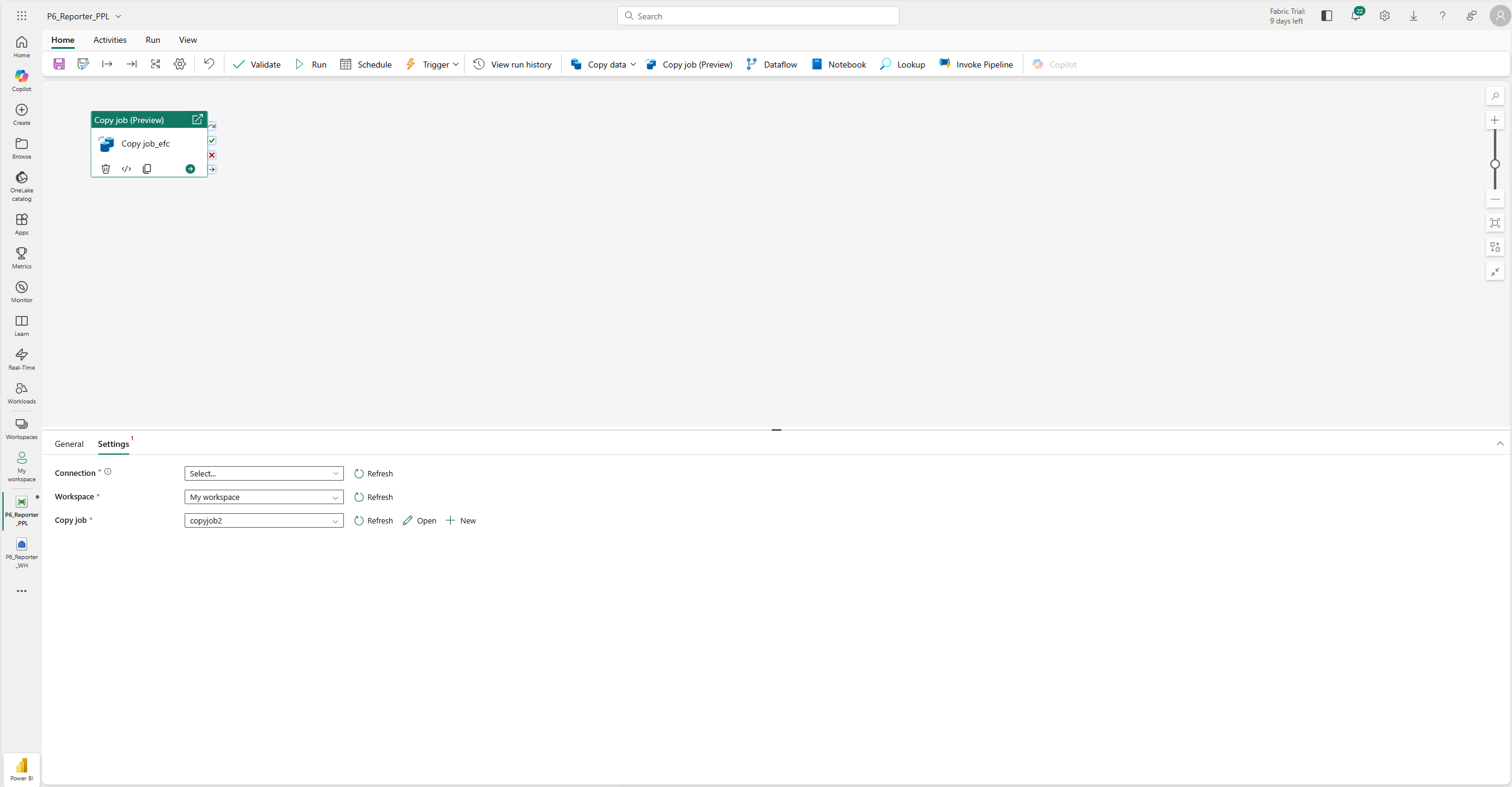

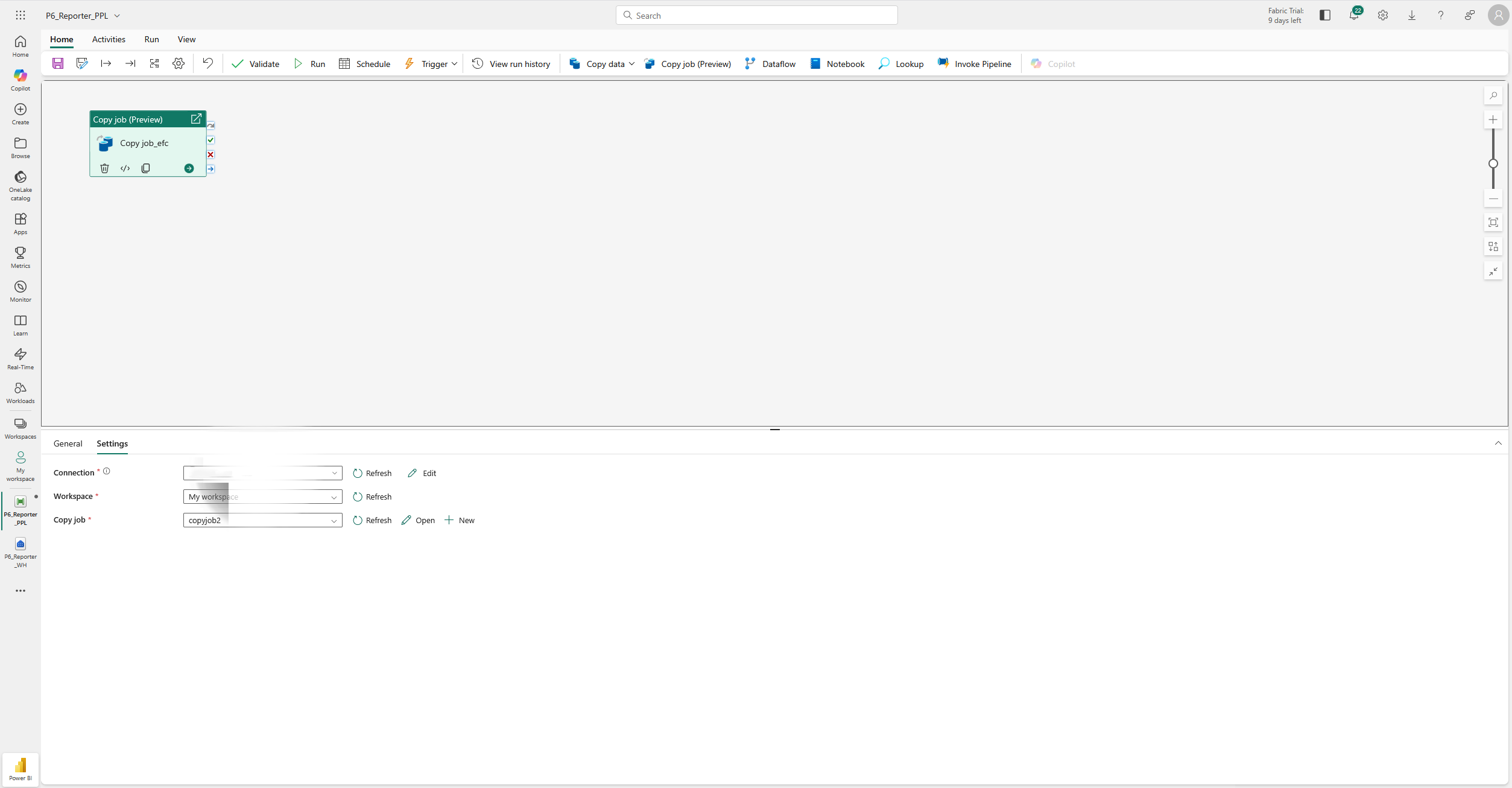

2) Pipeline: Copy P6-Reporter Database → Warehouse

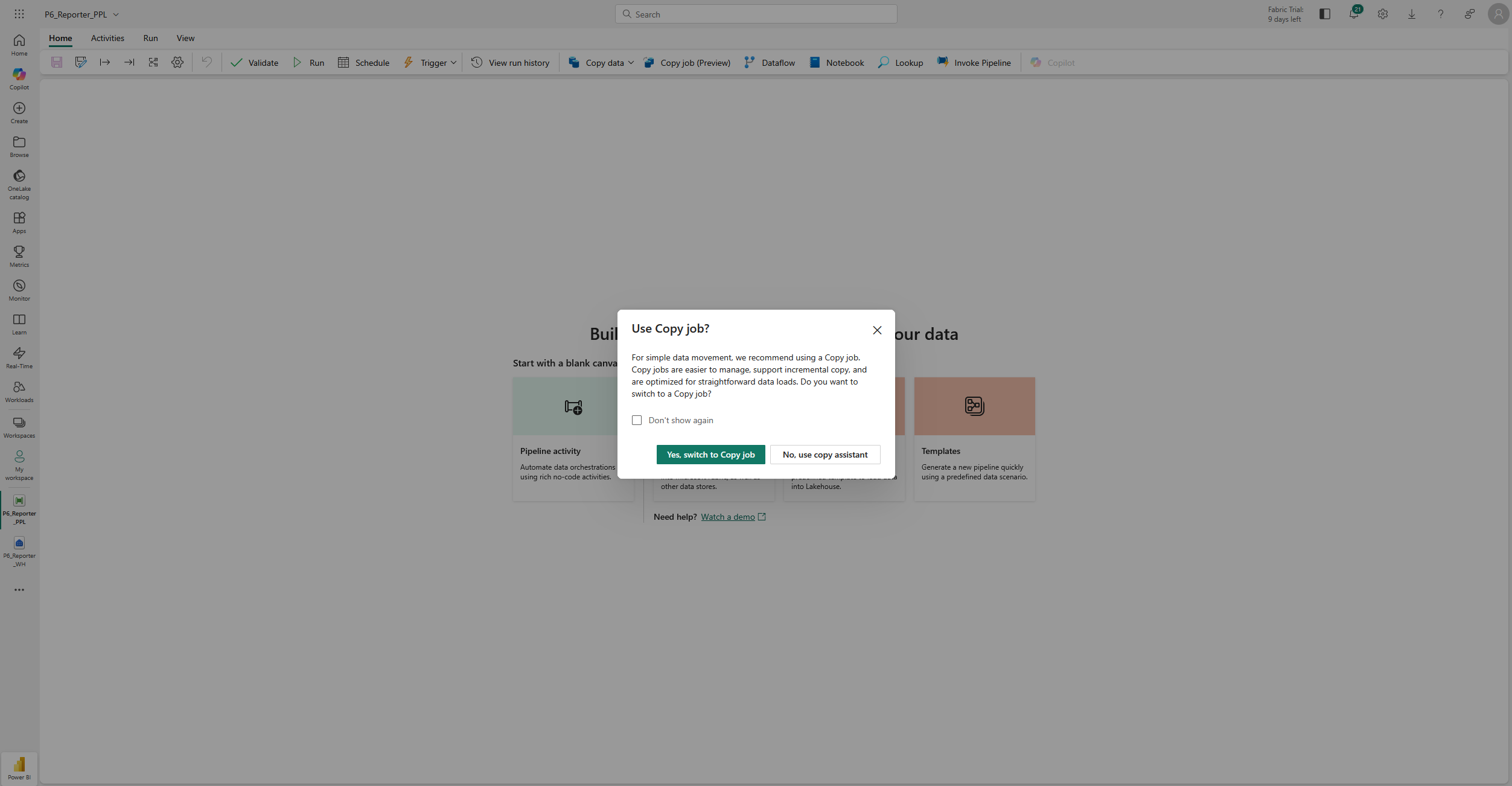

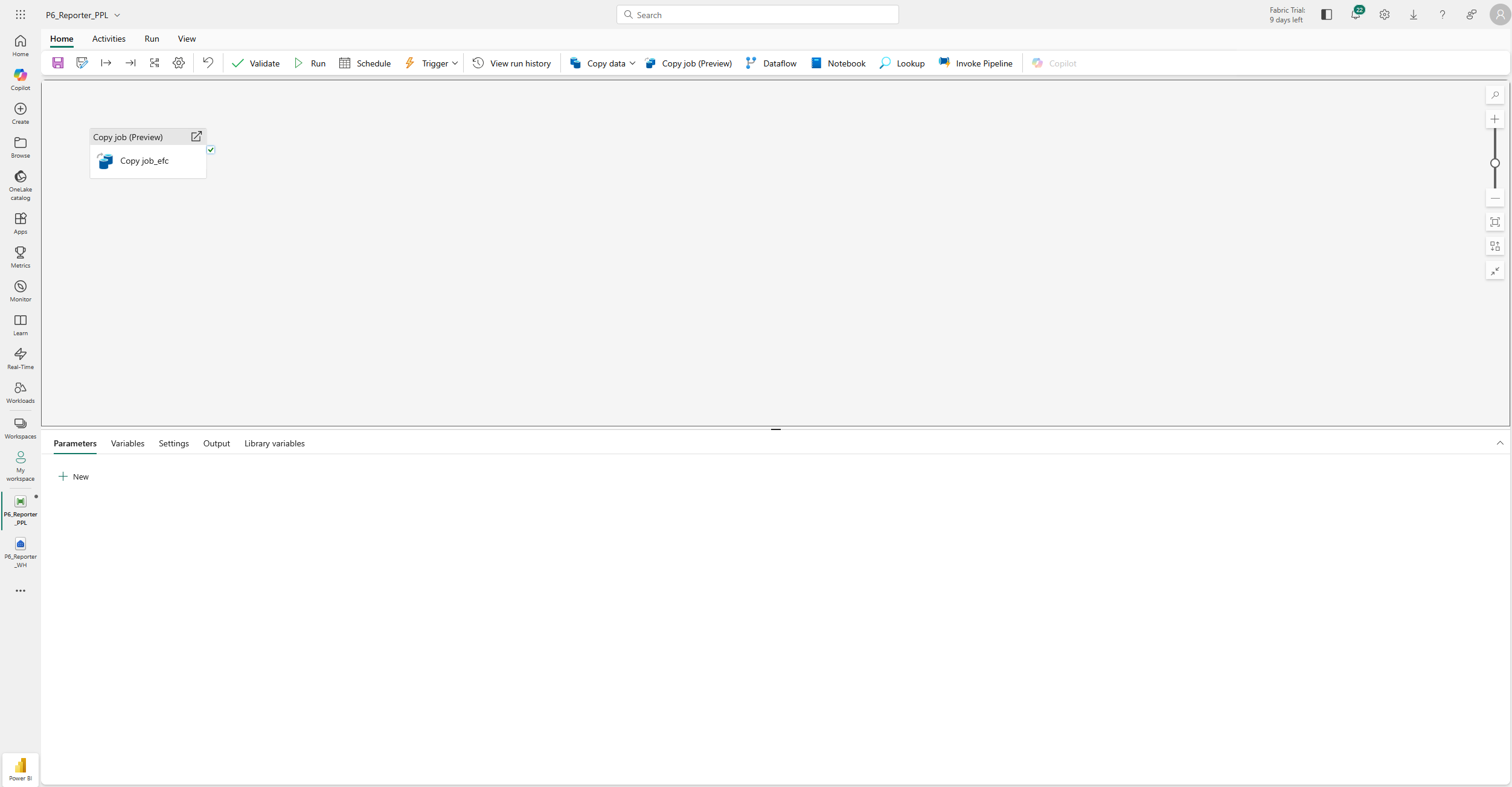

- New → Data pipeline

Name it, and Fabric creates the pipeline. Choose “Copy data assistant” to start.

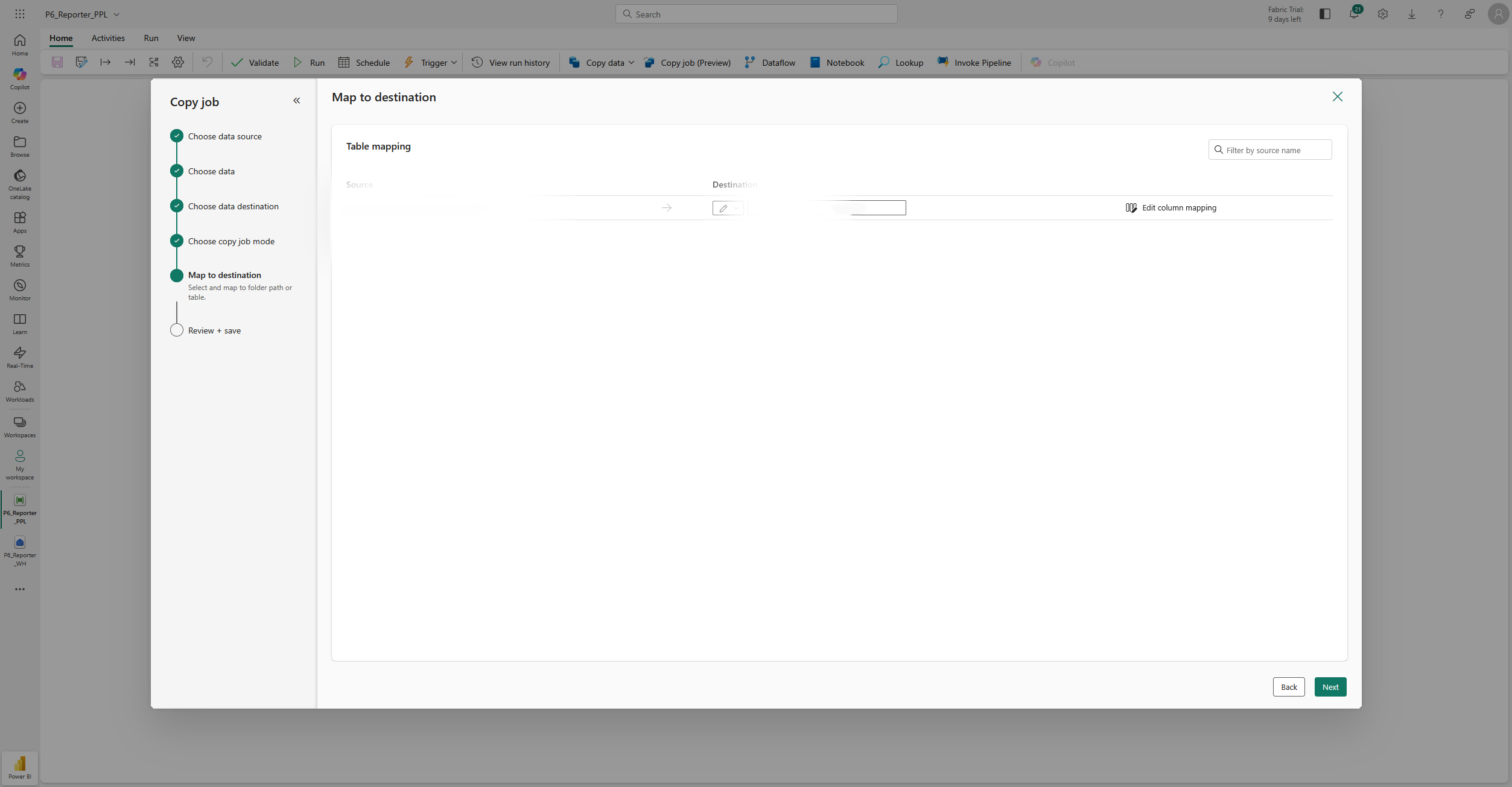

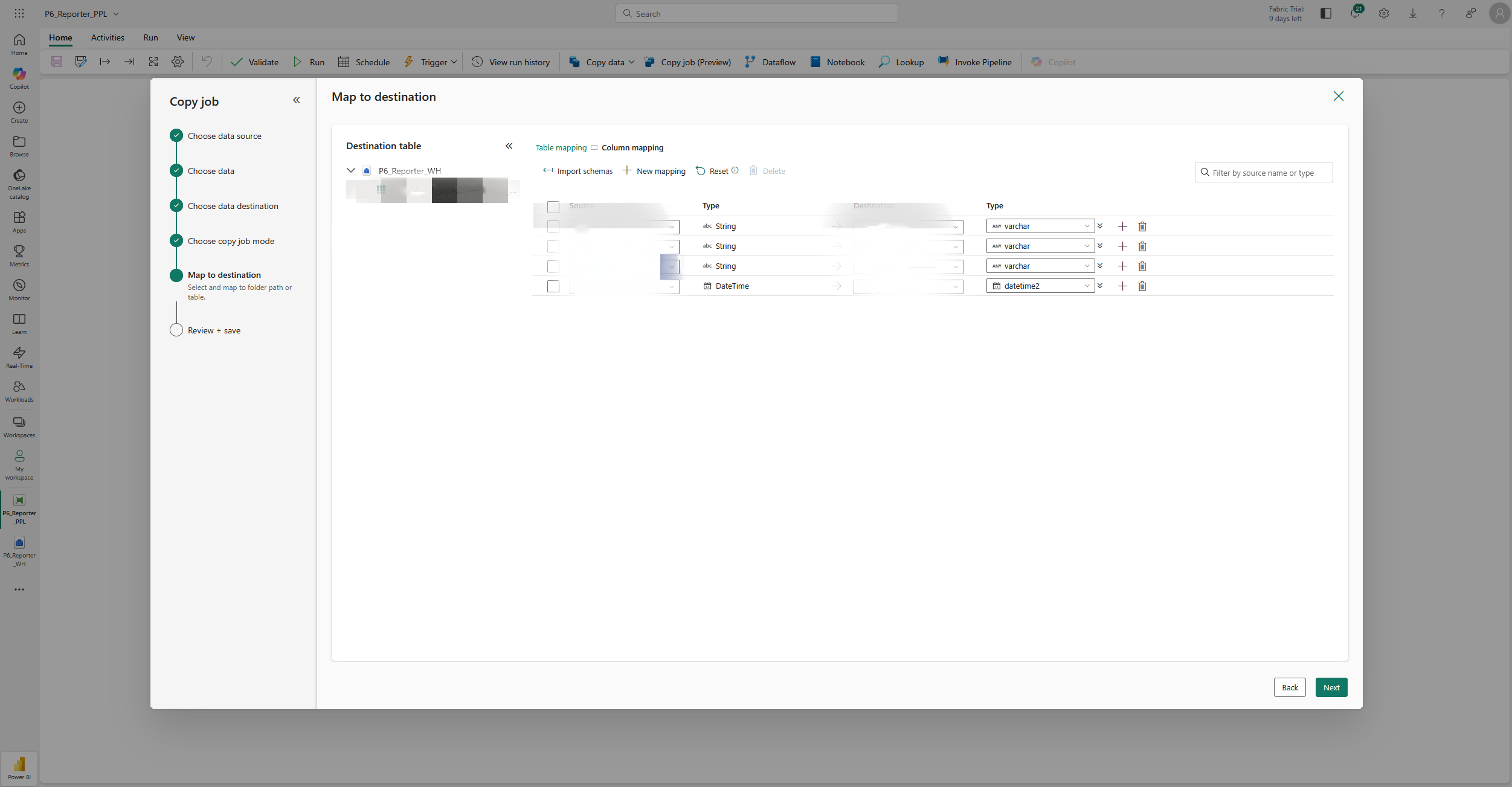

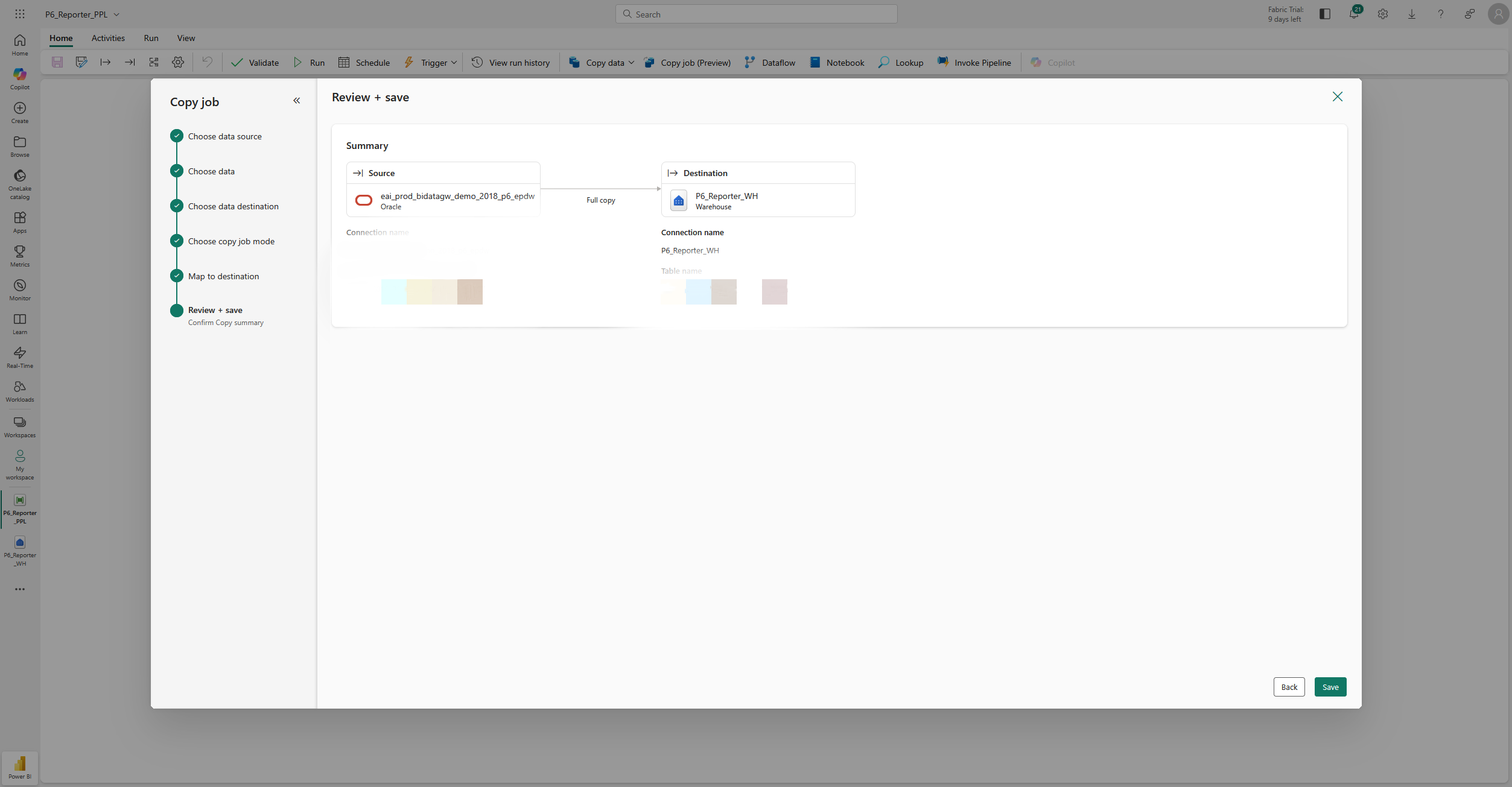

- Copy job:

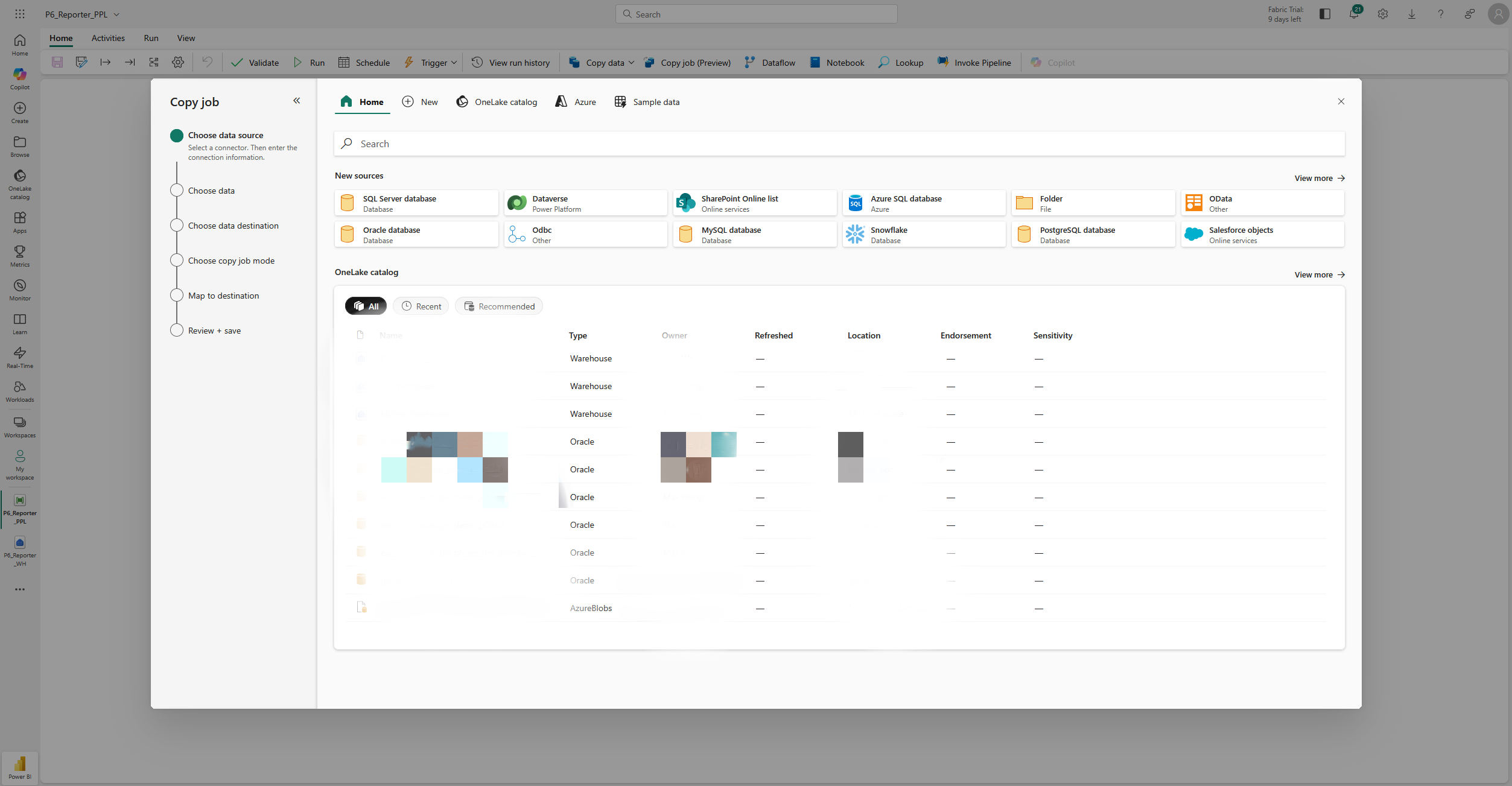

- Source:

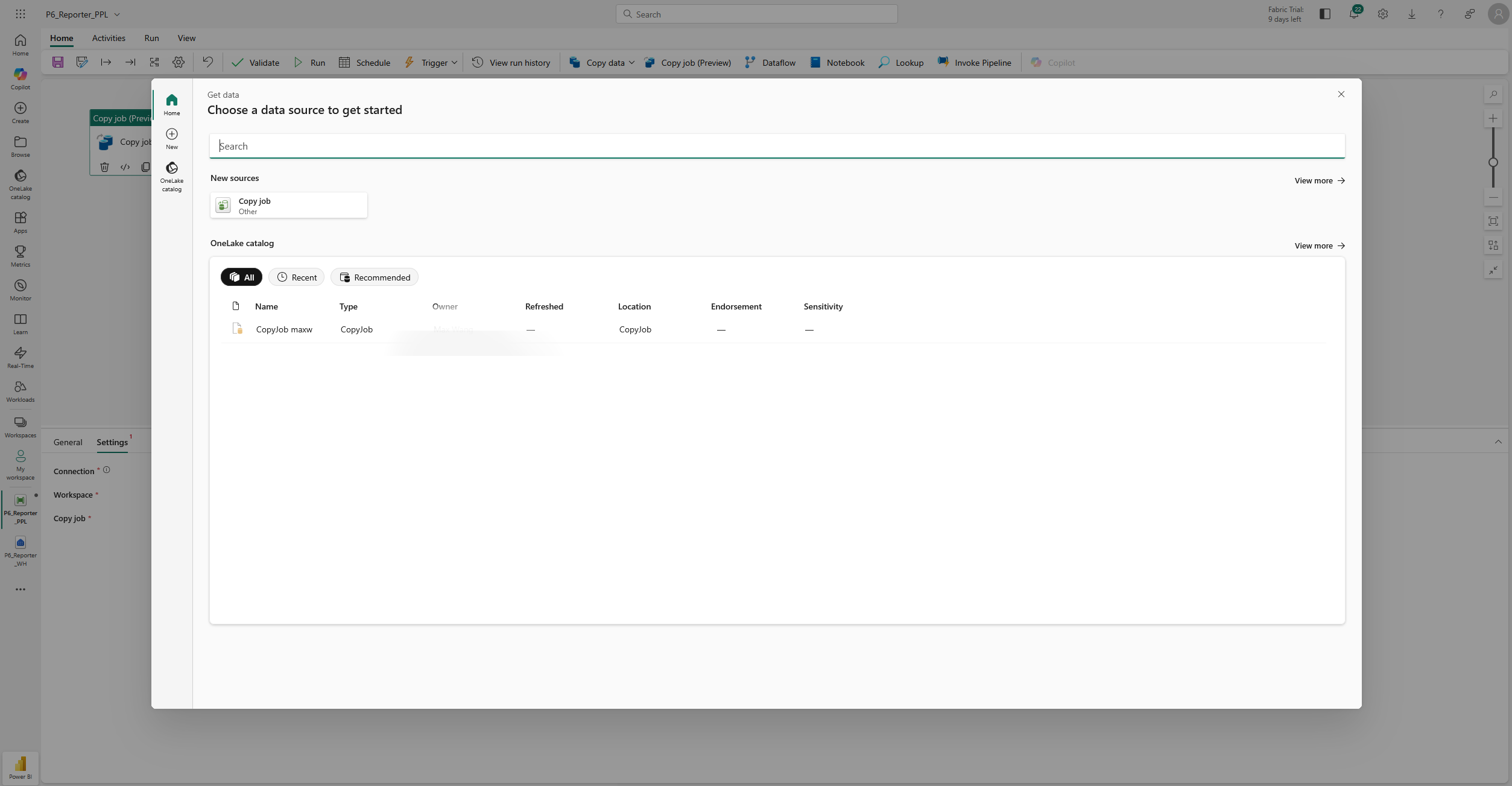

- Choose data:

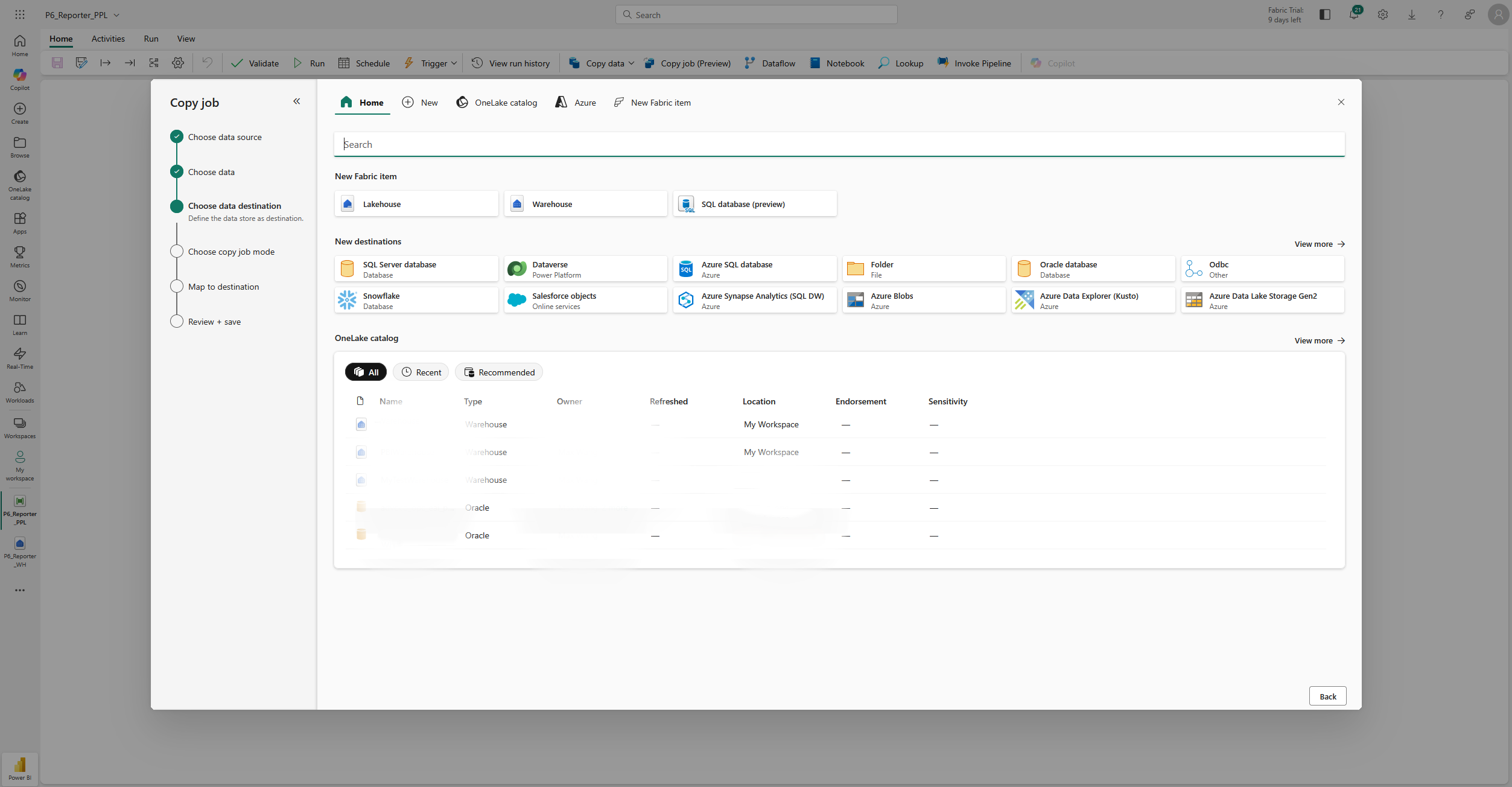

- Destination:

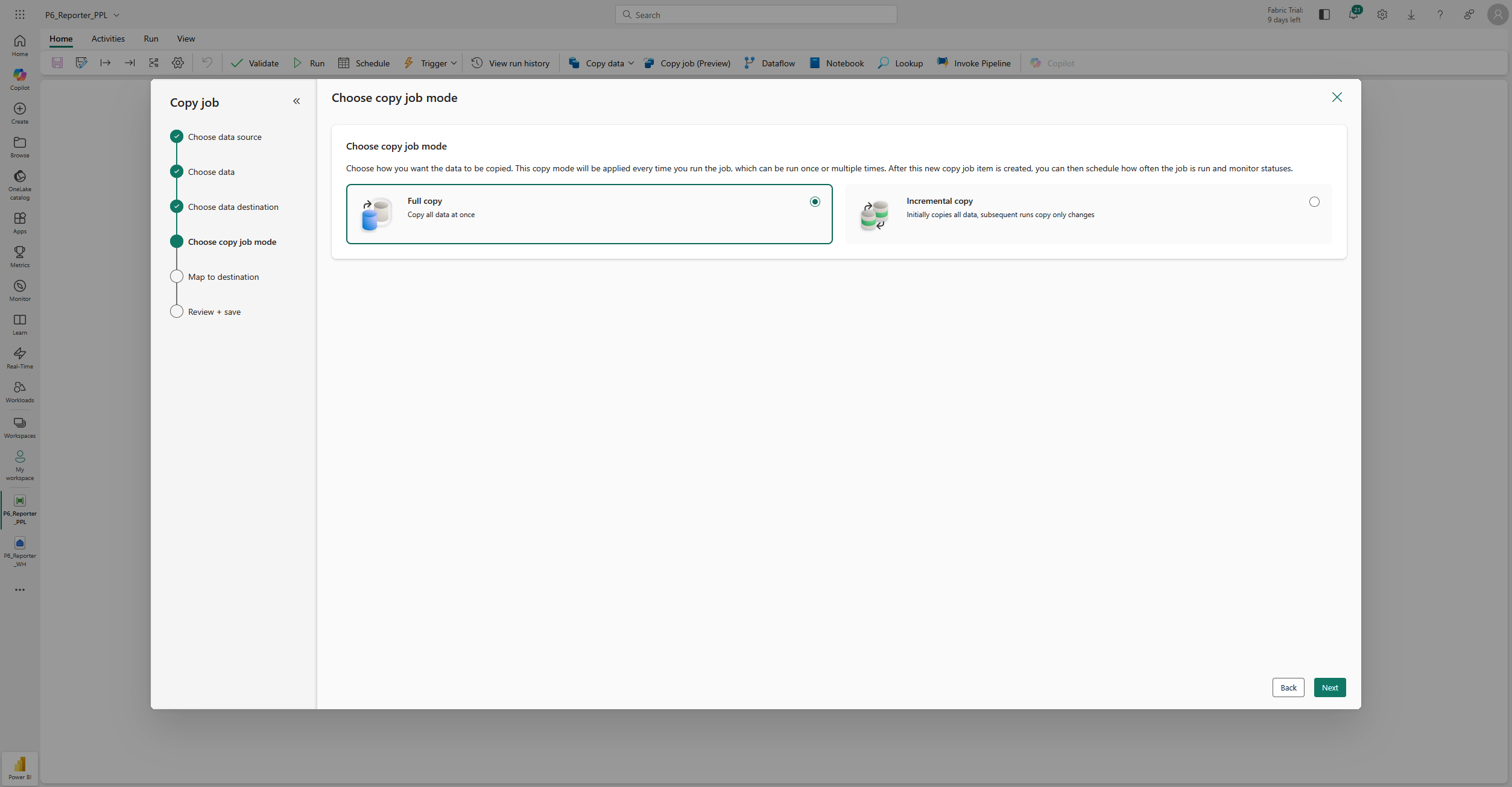

- Job mode:

- Mapping:

- Save:

- Connection:

Switch to Settings and select “Browse all” for Connection.

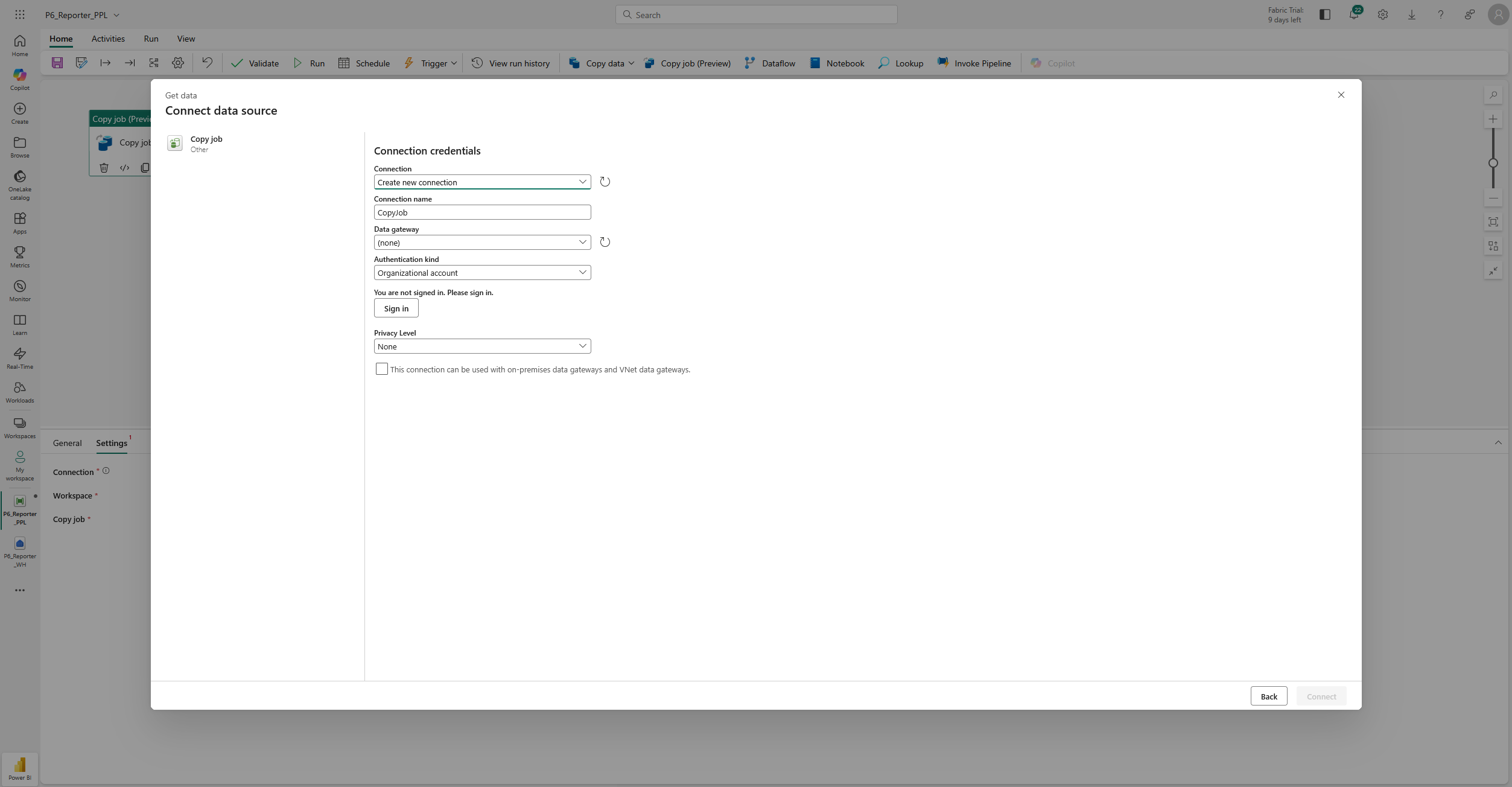

Create a new connection if you don’t already have one.

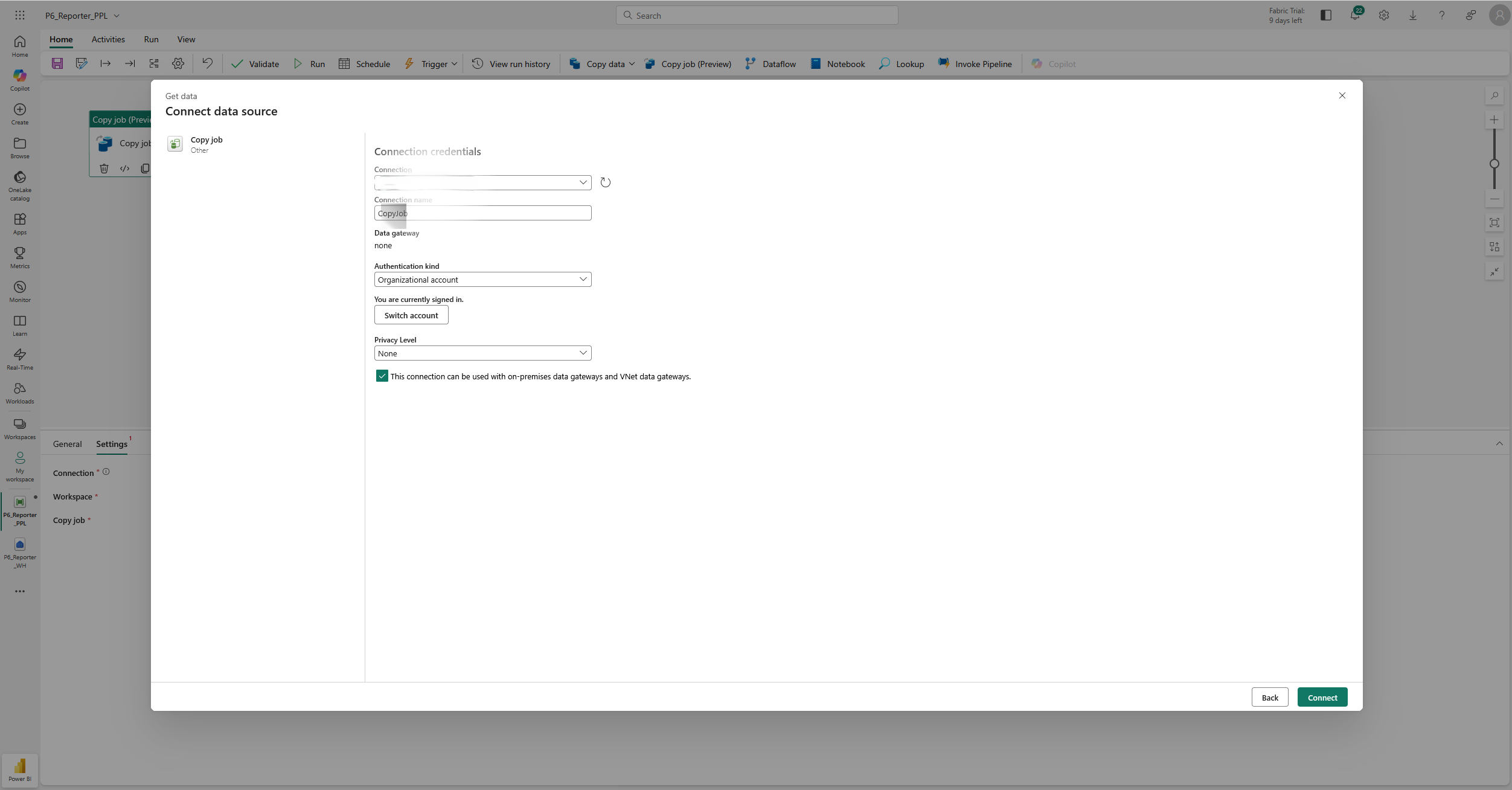

You may need to sign in first.

Remember to check the last option: “This connection can be used with on-premises data gateways and VNet data gateways.”

You are all set!

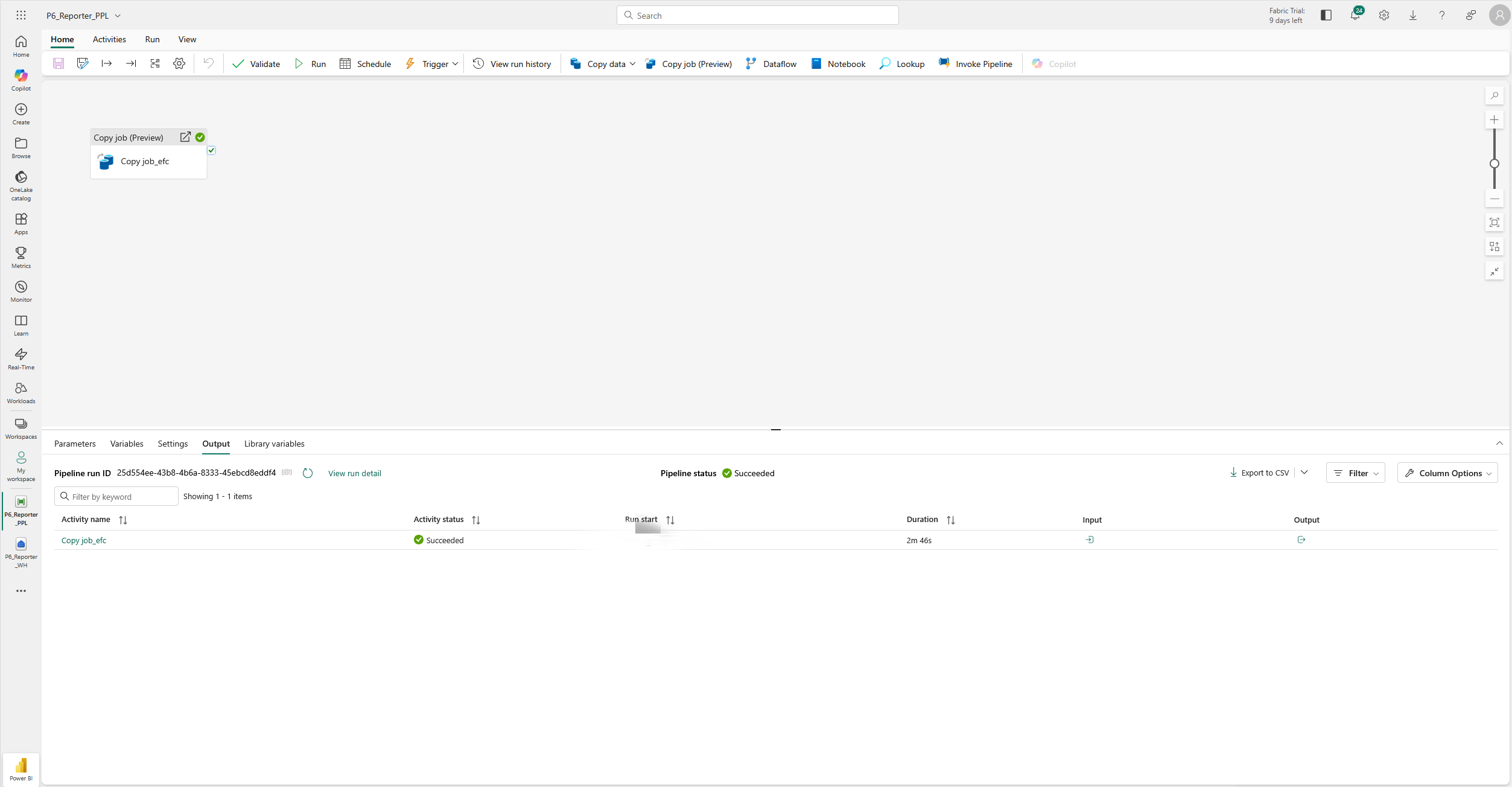

Run the pipeline and wait for it to complete.

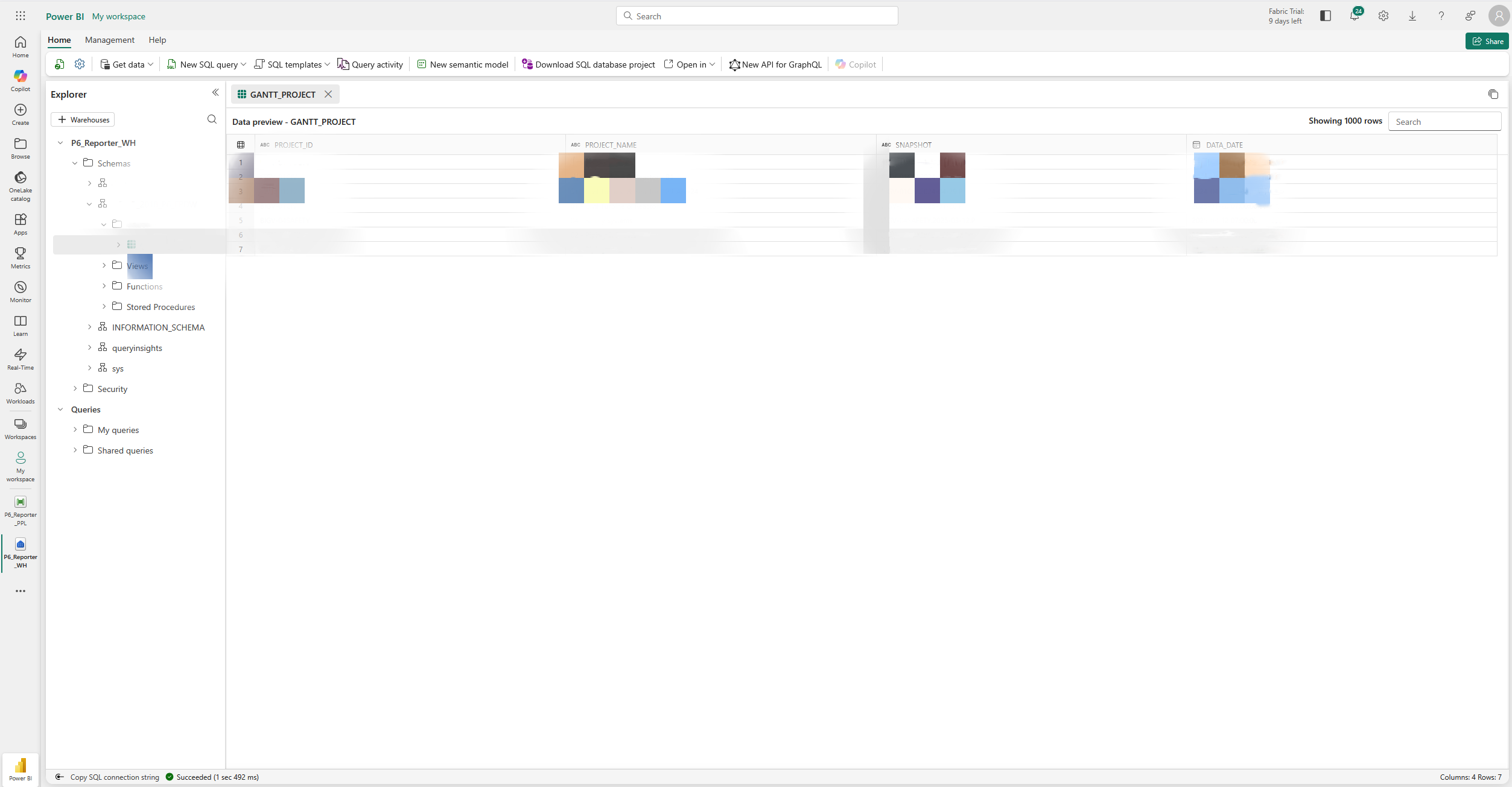

- Quick validation:

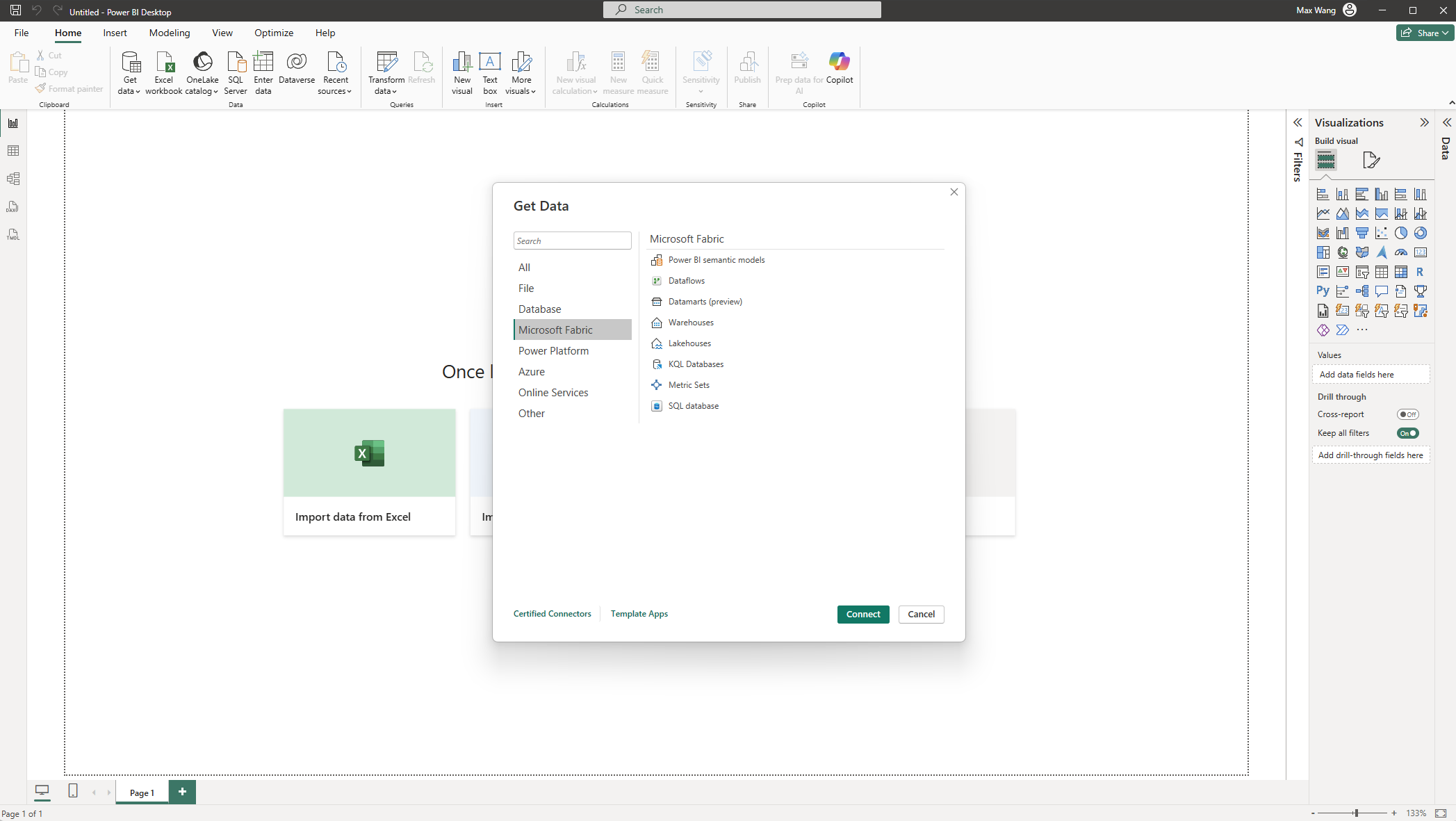

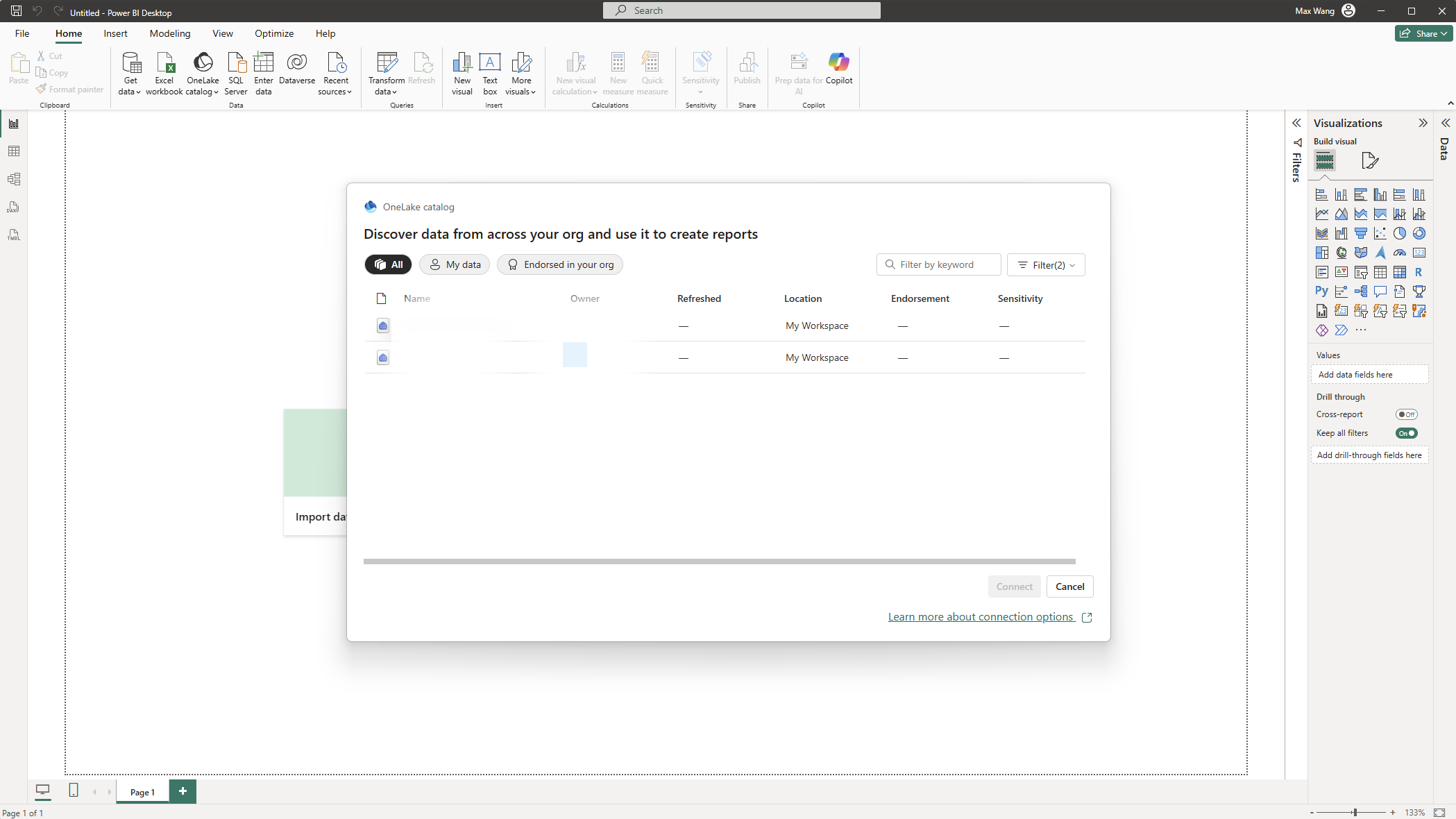
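
One simple way to make the quick validation repeatable is to compare per-table row counts from the source database against the counts in the Warehouse after the pipeline run. The sketch below assumes you have already collected both sets of counts (for example with `SELECT COUNT(*)` on each side); the function name and the table names in the usage note are hypothetical and not part of P6-Reporter or Fabric.

```python
from typing import Dict, Optional, Tuple


def compare_row_counts(
    source_counts: Dict[str, int],
    warehouse_counts: Dict[str, int],
) -> Dict[str, Tuple[int, Optional[int]]]:
    """Return the tables whose Warehouse row count differs from the source.

    Each mismatch maps the table name to (source count, Warehouse count).
    A Warehouse count of None means the table was not found there at all.
    """
    mismatches: Dict[str, Tuple[int, Optional[int]]] = {}
    for table, expected in source_counts.items():
        actual = warehouse_counts.get(table)
        if actual != expected:
            mismatches[table] = (expected, actual)
    return mismatches
```

For example, calling `compare_row_counts({"TABLE_A": 1200, "TABLE_B": 85}, {"TABLE_A": 1200})` would flag only `TABLE_B` as missing from the Warehouse. An empty result means every source table arrived with the same row count; it does not prove the column values match.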

3) Build a Power BI Report on the Warehouse

- In Power BI Desktop: Get Data → Microsoft Fabric → Warehouses.

- Pick your Warehouse and the freshly loaded tables.

- Choose a connection/storage mode:

- Import for compact models and offline analysis.

- DirectQuery for near-real-time reads against Warehouse.

- Direct Lake (if available for your scenario).

- Model relationships, author DAX measures, design visuals.

4) Publish & Refresh

- Publish the PBIX to the same workspace for simpler governance.

- Import models: configure dataset refresh (no on-prem Gateway needed now—the source is Warehouse).

- DirectQuery/Direct Lake: typically minimal dataset refresh; ensure the Pipeline cadence keeps Warehouse current.
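
For Import models, one option for keeping the dataset in step with the Pipeline cadence is to request a refresh through the Power BI REST API once the load has finished. The sketch below only builds the HTTP request for the "refresh dataset in group" endpoint and does not send it. The workspace ID, dataset ID, and access token are placeholders you would supply, and acquiring the token (for example through Microsoft Entra ID) is outside the scope of this example.

```python
import json
import urllib.request

# Base URL of the Power BI REST API.
API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def build_refresh_request(
    workspace_id: str, dataset_id: str, access_token: str
) -> urllib.request.Request:
    """Build (but do not send) a POST request that asks Power BI to refresh a dataset."""
    url = f"{API_ROOT}/groups/{workspace_id}/datasets/{dataset_id}/refreshes"
    # NoNotification suppresses the completion e-mail for this refresh.
    body = json.dumps({"notifyOption": "NoNotification"}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )
```

Passing the returned object to `urllib.request.urlopen` would submit the refresh. A scheduled refresh configured in the Service achieves the same result without code; the API route is mainly useful when the refresh should start right after a pipeline run rather than at a fixed time.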

FAQs & Field Notes: P6-Reporter to Fabric

Does this replace the Gateway?

- No. The Gateway remains essential for moving on-prem data into Fabric. What changes is its purpose: from serving report refreshes directly to feeding Fabric.

- Use a reliable watermark (timestamp or monotonically increasing ID). Start with a full load; then switch to incremental with upsert semantics.

- Check number precision (e.g., NUMBER(38, x)), timestamps/time zones, and reserved words. Normalize casing and schemas in the Warehouse.
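
The watermark note above can be illustrated with a small in-memory sketch: the first run has no watermark and behaves as a full load, and later runs copy only rows changed since the last watermark, overwriting existing keys (upsert). This illustrates the logic only, not how Fabric Data Pipelines implement it; the `Row` fields (`key`, `payload`, `updated_at`) are hypothetical stand-ins for whatever key and timestamp columns your P6-Reporter tables provide.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, Iterable, Optional


@dataclass(frozen=True)
class Row:
    key: int              # hypothetical primary key column
    payload: str          # stand-in for the remaining columns
    updated_at: datetime  # hypothetical last-modified timestamp (the watermark column)


def incremental_upsert(
    source_rows: Iterable[Row],
    target: Dict[int, Row],
    watermark: Optional[datetime],
) -> Optional[datetime]:
    """Upsert rows newer than `watermark` into `target` and return the new watermark.

    A watermark of None means "no previous run", so every row is loaded.
    """
    new_watermark = watermark
    for row in source_rows:
        if watermark is None or row.updated_at > watermark:
            # Insert a new key or overwrite the existing row with the same key.
            target[row.key] = row
            if new_watermark is None or row.updated_at > new_watermark:
                new_watermark = row.updated_at
    return new_watermark
```

Store the returned watermark after each successful run and pass it to the next one. The strict `>` comparison assumes timestamps are unique enough that no row shares the exact watermark value and arrives late; if that cannot be guaranteed, re-reading a small overlap window is a common safeguard, since the upsert makes reprocessing harmless.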

What Stays the Same (And Why That’s Good)

- P6-Reporter continues producing the curated Primavera P6 analytics that your teams rely on.

- The P6-Reporter database remains your system of record.

- Power BI continues as the visualization layer.

Where This Takes You Next

- Add a Lakehouse lane for large or semi-structured data.

- Expose Warehouse tables across multiple semantic models.

- Enrich P6-Reporter data with ERP/CMMS sources using Fabric pipelines.

If your organization runs Primavera P6 and wants clearer, faster, and more governable reporting, P6-Reporter gives you the curated data layer—and now Fabric gives you another powerful way to deliver it in Power BI.

About the Author

Max Wang - Integration Specialist

Max Wang is an Integration Specialist at Emerald Associates, leveraging his expertise to deliver innovative solutions. He is responsible for managing products such as P6-Loader and the Emerald BI-GanttView, among other tools.

Outside of work, Max is committed to continuous learning to stay ahead of the curve. He is also a passionate video blogger who delves into the technical aspects of popular games. His videos provide in-depth technical analyses using machine learning and other advanced methodologies.